Research Overview

Our lab is interested in understanding the everyday forms of intelligence that make humans so smart.

Despite recent advances, human intelligence still vastly outperforms our most capable machines, such as robots, driverless cars, and virtual assistants. We want to understand why that gap exists and how to narrow it. But what does it mean to "understand" something like intelligence?

We think the best standard for understanding is being able to build a system with that property (that is, to engineer it) while also respecting core explanatory principles. Thus, our lab aims to understand biological intelligence (e.g., in humans and animals) by building model systems that exhibit the kinds of agile intelligence we possess.

Fundamentally, human intelligence is about manipulating information in our minds. One compelling way to reverse-engineer intelligence is to build computer models or simulations that try to match human behavior.

This is why our lab is called the "Computation and Cognition Lab": we try to reverse-engineer the computational processes and representations that underlie human cognition and intelligence.

This field (computational cognitive science) is exciting and requires a particular type of scientist. It often appeals to those who like to synthesize ideas from many different areas of science (e.g., psychology, machine learning, artificial intelligence, computer science, mathematics, philosophy, and neuroscience).

In our actual research projects, we try to break these bigger questions down into manageable pieces. A typical research strategy focuses on a particular behavior that we can characterize well with human behavioral experiments (e.g., how people learn and make decisions, or how they ask questions). We then try to build a computer model that recreates that ability. We frequently focus on human behaviors that are challenging for current machine learning systems because this is where the real action is: how can we extend computational approaches to intelligence to encompass these abilities as well?

Our lab has several broad research themes:

1. Self-directed/Active Learning

Unlike today's machine learning systems, children are remarkable in their ability to bootstrap knowledge from independent, self-guided interactions with the world. They ask questions, curiously explore new toys, and push at the boundaries of what they currently know, often on their own without a teacher or parent.

Our lab has been interested in understanding the nature of this human ability in computational terms. How can we figure out how something works by tinkering with it? How do we formulate and ask creative questions that reveal helpful information about abstract concepts? How do we judge which actions we can take to obtain helpful information? How do our choices to gather information affect our learning and memory? How do we creatively propose new tasks for ourselves and learn from them to extend our abilities? Moreover, how do these abilities develop across the human lifespan?

Our lab is often inspired by research in machine learning that seeks to develop machines with intrinsic abilities and desires to learn. For example, the field of active learning studies algorithms that learn by choosing which examples are likely to be informative. Similarly, research on intrinsic motivation examines how to give machines the capacity for exploration and discovery.

Imagine a computer that could program itself, building competencies as it set itself new challenges. These are precisely the adaptive, lifelong learning abilities that young children have! Our hope is that by studying how children and adults accomplish this, we can one day realize this vision.

2. Mental Representations as Symbolic Programs

There have been many proposals about the nature of human mental representations, such as vector spaces, neural networks, and abstract structures like graphs and trees. One somewhat underexplored idea is that some parts of our mental representations are built from bits of programs, much like the code one might write in Python or JavaScript. The idea behind this hypothesis is quite old, going back to the earliest days of AI and cognitive science (an approach often called Symbolic AI or "Good Old-Fashioned AI"). Recently, however, there has been something of a revival of interest in this idea.

So what can we do with programs? One exciting task, prevalent in AI/ML, is program induction. In program induction, an agent (computer or person) sees either the output of a computer program or a set of its input-output pairs and tries to infer the underlying program. This seems like a challenging game to play, but something similar likely underlies many of our everyday inferences. For example, we predict how other people will respond to us without any access to the "behavior program" they are running; instead, we infer how they think and act primarily from input-output pairs, that is, from how they have behaved in different situations. Similarly, few of us completely understand technological artifacts like our cars. Still, from inputs (pressing the gas or brake pedal) and outputs (the car's speed), we understand some of the underlying rules that govern driving.

For the last several years, we've been interested in how we can use this idea to understand various aspects of human behavior. For example, in Rothe, Lake, & Gureckis (2017), we used program-like representations to model how people ask informative questions while learning. In Bramley et al. (2018), we studied how people search hypothesis spaces composed of program-like structures. More recently, we've focused on studying human behavior in program induction tasks like the one described above. Finally, some recent work has used program-like representations to model goals and how people propose and design games (see the section on Human Reinforcement Learning).
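As a toy illustration of program induction from input-output pairs, the Python sketch below enumerates every short program in a tiny language of integer operations and keeps those consistent with the examples. The primitives, names, and examples are hypothetical; they are not the stimuli or models from the studies cited above.

```python
from itertools import product
from typing import Callable, Dict, List, Sequence, Tuple

# A tiny, hypothetical "language" of integer-to-integer primitives.
PRIMITIVES: Dict[str, Callable[[int], int]] = {
    "add1": lambda x: x + 1,
    "add2": lambda x: x + 2,
    "double": lambda x: x * 2,
    "square": lambda x: x * x,
    "negate": lambda x: -x,
}

Program = Tuple[str, ...]


def run(program: Program, x: int) -> int:
    """Apply each primitive in order, left to right."""
    for name in program:
        x = PRIMITIVES[name](x)
    return x


def induce(examples: Sequence[Tuple[int, int]], max_len: int = 2) -> List[Program]:
    """Return every program up to max_len steps that fits all input-output pairs.

    Programs are enumerated shortest first, so earlier entries are simpler.
    """
    consistent: List[Program] = []
    for length in range(1, max_len + 1):
        for program in product(PRIMITIVES, repeat=length):
            if all(run(program, x) == y for x, y in examples):
                consistent.append(program)
    return consistent


if __name__ == "__main__":
    # One example is ambiguous: three different rules map 2 to 4.
    print(induce([(2, 4)], max_len=1))          # [('add2',), ('double',), ('square',)]
    # A second example rules out two of them.
    print(induce([(2, 4), (3, 9)], max_len=1))  # [('square',)]
```

The three programs that fit the single pair (2, 4) disagree about the output for 3 (5, 6, or 9), which is why a learner, human or machine, needs either more examples or some preference among hypotheses (for instance, favoring shorter programs) in order to generalize.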

3. Intuitive Physical Reasoning

Humans have a remarkable capacity for commonsense reasoning about the physical world. We can judge when a cup left precariously on the edge of the sink may fall in, when it is safe to cross the street, and how to play games with balls, discs, and other toys. Each of these abilities requires predicting and inferring how events in the physical world unfold: how objects move (or stay put) and how they otherwise interact.

The goal of our research in this area is to better understand the representations and processes the mind uses to reason about physics. People are unlikely to directly compute the relevant physical laws (laws that took centuries of human ingenuity to identify and write down). Instead, we have some innate mechanisms for processing objects and their motions. What are these mechanisms?

One helpful approach to these questions is to identify constraints on the system. Does it make mistakes relative to the ground truth of the physical world? If so, these mistakes might help us understand the unique architecture of human physical reasoning. In collaboration with Ernest Davis, a computer scientist at NYU, we have written a few papers exploring the limits of people's intuitive physical reasoning. One exciting result is that we found a new type of conjunction fallacy in reasoning about simple physical situations (Ludwin-Peery, Bramley, Davis, & Gureckis, 2020)!
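For readers new to the term: a conjunction fallacy is judging the conjunction of two events to be more probable than one of its parts. The probability rule being violated is short to state (here $A$ and $B$ are generic events, not items from the study):

$$
P(A \land B) \le P(A), \qquad \text{since } P(A \land B) = P(A)\,P(B \mid A) \text{ and } P(B \mid A) \le 1 \text{ whenever } P(A) > 0.
$$

Any judgment that rates "A and B" as more likely than "A" alone therefore breaks this rule, whatever the content of A and B.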

Our approach has led to an "adversarial collaboration" with Kevin Smith and Josh Tenenbaum evaluating the limits and advantages of different approaches to modeling intuitive physics. Several projects have grown out of this work, including how people infer the existence of occluded objects from the motion of other, visible objects and how people "experiment" with the physical world to uncover latent properties of objects.


4. Human Reinforcement Learning

Reinforcement learning (RL) is a subfield of AI concerned with how agents can learn to make good decisions based on evaluative feedback (i.e., scalar rewards). There is a rich literature on both the cognitive and neural bases of RL in humans. Our lab has studied human decision-making from the perspective of RL for several years. This work includes how people learn representations of their world that enable effective RL, how people reason and plan (particularly in social settings), and how people can become "trapped" when learning to avoid negative outcomes.
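One textbook way to formalize learning from scalar rewards, offered here only as a generic illustration and not as a description of the specific models used in this research, is the incremental "delta-rule" update found in Rescorla-Wagner-style learning models and in simple bandit versions of Q-learning:

$$
Q_{t+1}(a_t) = Q_t(a_t) + \alpha \bigl( r_t - Q_t(a_t) \bigr)
$$

Here $a_t$ is the action chosen on trial $t$, $r_t$ is the reward received, and $\alpha \in (0, 1]$ is a learning rate. The term in parentheses is the reward prediction error, and only the chosen action's value estimate changes.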

Looking forward, we see several future directions. It has long been recognized that people learn more efficiently than most RL algorithms (e.g., recent deep reinforcement learning algorithms have to play games millions of times to match the performance of a human who has played only a handful of games). Understanding what makes human learning so efficient is thus an exciting topic with direct applications to computer science and AI. However, there are other interesting differences between human and machine RL as well.

For example, we have explored the interaction between language and reinforcement learning. RL primarily studies how we learn which actions to take in different situations. However, we also receive language instructions for performing tasks and verbal hints on how to do things. How such language is translated into action policies that can then be refined by, or can influence, reinforcement learning is currently an understudied topic.

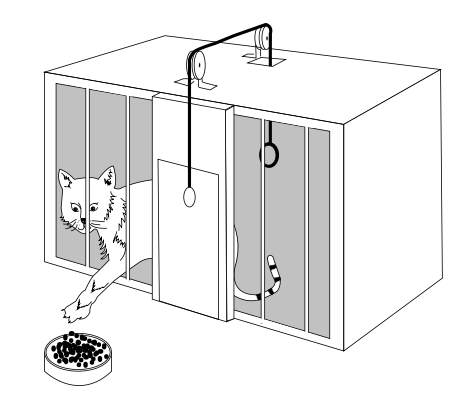

As another example, the external world does not always provide obvious rewards (e.g., when children engage in unstructured play). We are interested in how people generate goals for themselves that they can then achieve, and how these goal representations might be more efficient and natural than specifying reward functions.

Finally, we are interested in cases where both humans and machine RL agents get "stuck" during learning, settling into stable but suboptimal patterns of behavior. We call these cases "learning traps." They arise specifically in situations where people learn from positive and negative feedback and have to generalize knowledge from one experience to the next. Understanding why we get stuck may help us understand how to get people unstuck, an application with practical societal relevance, such as reducing the formation of negative stereotypes.
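The toy Python simulation below illustrates one ingredient of a learning trap: feedback arrives only for options that are actually chosen. It is a hypothetical sketch, not the lab's experimental task or model, and it leaves out the generalization component described above; the payoffs, the learning rate `alpha`, and the exploration rate `epsilon` are made up for illustration. A purely greedy learner whose first "approach" happens to go badly never approaches again, so its pessimistic estimate is never corrected, while a learner that occasionally explores can discover that approaching pays off on average.

```python
import random
from typing import Dict, Tuple

# Hypothetical payoffs: "approach" pays out in a repeating cycle whose first
# outcome is bad but whose average is good (+0.5); "avoid" always pays 0.
APPROACH_OUTCOMES = [-1.0, 1.0, 1.0, 1.0]


def simulate(n_trials: int, epsilon: float, alpha: float = 0.1,
             seed: int = 0) -> Tuple[Dict[str, float], int]:
    """Run an epsilon-greedy delta-rule learner.

    Returns the final value estimates and how many times it chose "approach".
    """
    rng = random.Random(seed)
    q = {"approach": 0.0, "avoid": 0.0}  # initial value estimates
    n_approach = 0
    for _ in range(n_trials):
        if rng.random() < epsilon:
            action = rng.choice(["approach", "avoid"])  # occasional exploration
        else:
            action = max(q, key=q.get)  # greedy; ties go to "approach" (first key)
        if action == "approach":
            reward = APPROACH_OUTCOMES[n_approach % len(APPROACH_OUTCOMES)]
            n_approach += 1
        else:
            reward = 0.0
        # Only the chosen option is updated: avoided options yield no feedback.
        q[action] += alpha * (reward - q[action])
    return q, n_approach


if __name__ == "__main__":
    print(simulate(1000, epsilon=0.0))  # ({'approach': -0.1, 'avoid': 0.0}, 1)
    print(simulate(1000, epsilon=0.1))
```

The greedy learner approaches exactly once and then avoids forever, even though approaching is the better option on average; exploration is one simple (if blunt) way out of the trap.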

Sample Projects

Phew! That was a lot. Still want more info? To give you a better sense of the basic scientific questions we are interested in, read these high-level introductions to some of our recent projects:

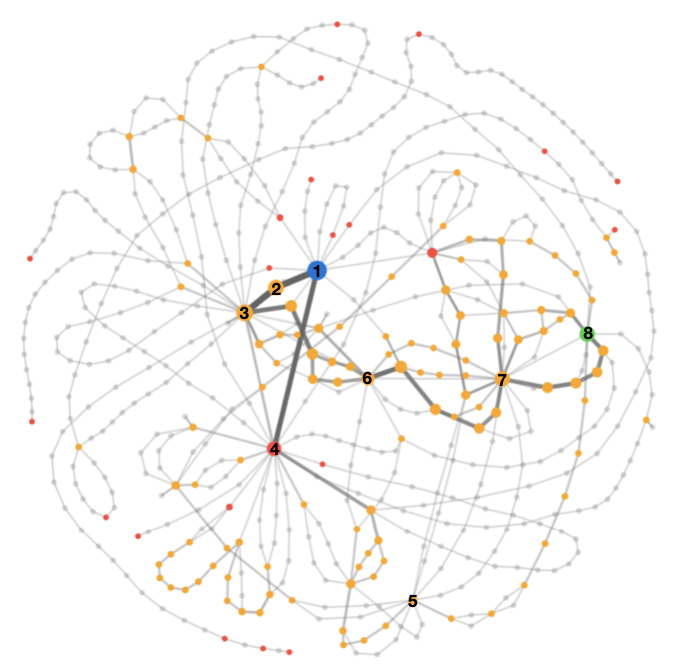


Getting involved

Chances are, if you are reading this, you are interested in joining the lab or getting involved in our research efforts. We've put together a handy guide with information about how to get involved.